Data Workflows

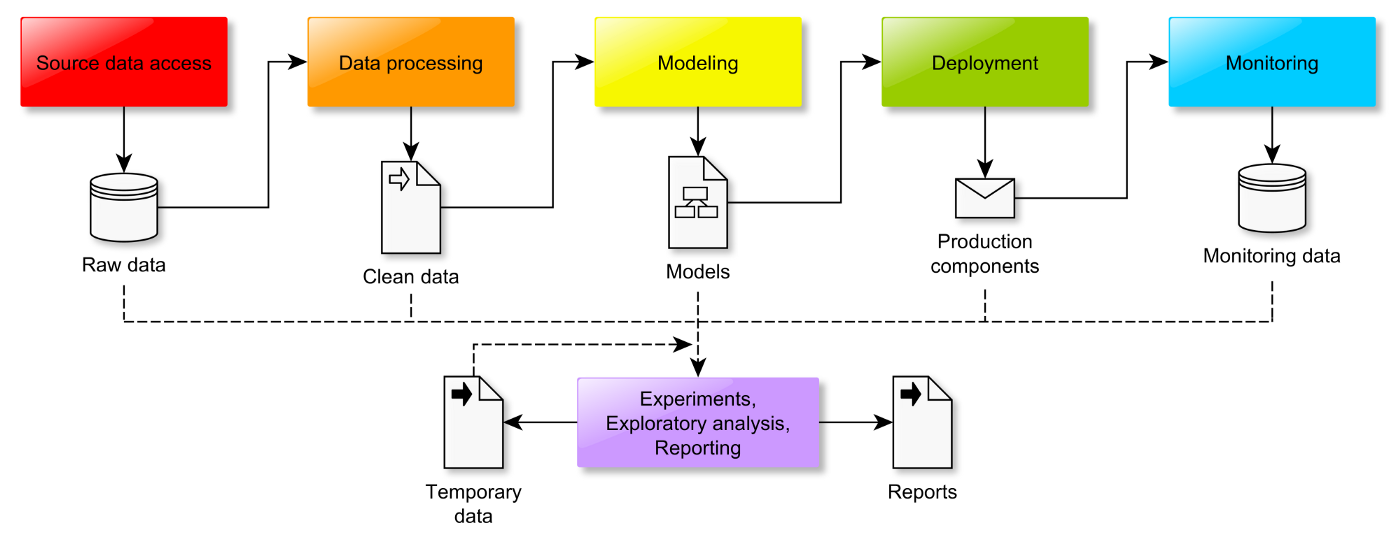

Although data science projects vary widely in their aims, scale, and technologies, at a certain level of abstraction most of them follow the same three-stage workflow:

- Connect: Variety of Data Sources

- Compose: Cleaning, Munging, Modeling

- Consume: Deployment, Monitoring, Visualization
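The three stages can be sketched as plain functions chained together. This is a minimal Python sketch, assuming an in-memory CSV string stands in for a real data source; the function names `connect`, `compose`, and `consume` are hypothetical and simply mirror the stage names above.

```python
import csv
import io
from statistics import mean

# Hypothetical raw input standing in for a real source (file, API, database).
RAW_CSV = """city,temp_c
Oslo,4.5
Lima,
Cairo,31.0
oslo,5.5
"""

def connect(text: str) -> list[dict[str, str]]:
    """Connect: read raw records from a source."""
    return list(csv.DictReader(io.StringIO(text)))

def compose(rows: list[dict[str, str]]) -> list[dict[str, object]]:
    """Compose: clean and munge (drop missing values, normalize names, cast types)."""
    cleaned = []
    for row in rows:
        if not row["temp_c"]:
            continue  # drop rows with a missing measurement
        cleaned.append({"city": row["city"].title(), "temp_c": float(row["temp_c"])})
    return cleaned

def consume(rows: list[dict[str, object]]) -> dict[str, float]:
    """Consume: produce a summary that a dashboard or report could display."""
    by_city: dict[str, list[float]] = {}
    for row in rows:
        by_city.setdefault(row["city"], []).append(row["temp_c"])
    return {city: mean(temps) for city, temps in by_city.items()}

summary = consume(compose(connect(RAW_CSV)))
print(summary)  # {'Oslo': 5.0, 'Cairo': 31.0}
```

Keeping each stage a separate function makes it possible to swap a source, a cleaning rule, or an output independently of the others.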

The elements of a pipeline are often executed in parallel or in a time-sliced fashion, and some amount of buffering is often inserted between elements so that short-lived differences in speed between stages do not stall the whole pipeline.
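Parallel stages with buffers between them can be sketched with Python threads and bounded `queue.Queue` objects. This is a minimal sketch: the stage functions and the buffer size of 2 are illustrative assumptions. A bounded queue makes a fast producer block when its buffer is full instead of consuming unbounded memory.

```python
import queue
import threading

SENTINEL = object()  # marks the end of the stream

def stage(func, inbox: queue.Queue, outbox: queue.Queue) -> None:
    """Apply func to each item from inbox and pass the result downstream."""
    while True:
        item = inbox.get()
        if item is SENTINEL:
            outbox.put(SENTINEL)  # propagate shutdown to the next stage
            return
        outbox.put(func(item))

def run_pipeline(items, funcs, buffer_size: int = 2) -> list:
    # One bounded queue (buffer) between each pair of adjacent stages.
    queues = [queue.Queue(maxsize=buffer_size) for _ in range(len(funcs) + 1)]
    threads = [
        threading.Thread(target=stage, args=(f, queues[i], queues[i + 1]))
        for i, f in enumerate(funcs)
    ]
    for t in threads:
        t.start()  # all stages run concurrently

    def feed() -> None:
        for item in items:
            queues[0].put(item)  # blocks while the first buffer is full
        queues[0].put(SENTINEL)

    # Feeding from a separate thread lets this thread drain the last buffer.
    feeder = threading.Thread(target=feed)
    feeder.start()

    results = []
    while (item := queues[-1].get()) is not SENTINEL:
        results.append(item)
    feeder.join()
    for t in threads:
        t.join()
    return results

print(run_pipeline(range(5), [lambda x: x + 1, lambda x: x * 10]))
# [10, 20, 30, 40, 50]
```

Because each stage runs in its own thread and reads from a FIFO queue, output order matches input order; production systems such as Kafka play the role of these buffers at much larger scale.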

A data pipeline is the sum of all these steps, and its job is to ensure that every step is applied reliably to all of the data. These processes should be automated, but most organizations still need at least one or two engineers to maintain the systems, repair failures, and update the pipeline as business needs change.
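One common way to make an individual step more reliable is to retry transient failures with exponential backoff, and only escalate to an engineer once retries are exhausted. This is a minimal sketch, assuming a step is any zero-argument callable; `run_with_retries`, `max_attempts`, and `base_delay` are hypothetical names, not part of any particular scheduler's API.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")

def run_with_retries(step: Callable[[], T], max_attempts: int = 3,
                     base_delay: float = 0.1) -> T:
    """Run step; on failure wait base_delay * 2**(attempt - 1) and try again.

    After max_attempts failures the last exception is re-raised so that an
    engineer (or an alerting system) can step in.
    """
    attempt = 1
    while True:
        try:
            return step()
        except Exception as exc:
            if attempt >= max_attempts:
                raise  # give up and surface the failure
            delay = base_delay * 2 ** (attempt - 1)
            print(f"attempt {attempt} failed ({exc}); retrying in {delay:.2f}s")
            time.sleep(delay)
            attempt += 1

calls = {"n": 0}

def flaky_load() -> str:
    """Hypothetical load step that fails twice before succeeding."""
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("source unavailable")
    return "loaded"

print(run_with_retries(flaky_load, base_delay=0.01))  # two retry messages, then "loaded"
```

In practice you would retry only errors known to be transient (such as `ConnectionError`) rather than every `Exception`, so that genuine bugs fail fast.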

We automate your data pipeline. Contact us for more details.

Benefits of Our Services

Our approach offers the following benefits:

- Better Decision Making

- Cost Reduction

- Improved Performance

- Agile, Streamlined Execution

- Latest technology, with the necessary hand-holding

Pipeline Components

A carefully managed data pipeline gives organizations access to reliable, well-structured datasets for analytics. Automating the movement and transformation of data makes it possible to consolidate data from multiple sources so that it can be used strategically. We help you build a seamless, scalable data pipeline that meets your data requirements.
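Consolidating data from multiple sources usually means mapping each source's fields onto one shared schema and merging records on a common key. This is a minimal sketch, assuming two hypothetical sources (a CRM export and a billing system) that identify customers by email address under different field names and casing.

```python
from typing import Iterable

# Hypothetical records from two sources with different field names and formats.
crm_rows = [
    {"Email": "Ana@Example.com", "FullName": "Ana Silva"},
    {"Email": "bo@example.com", "FullName": "Bo Chen"},
]
billing_rows = [
    {"customer_email": "ana@example.com", "total_spend": "120.50"},
    {"customer_email": "cy@example.com", "total_spend": "40.00"},
]

def normalize_email(email: str) -> str:
    """Build a join key that matches across sources."""
    return email.strip().lower()

def consolidate(crm: Iterable[dict], billing: Iterable[dict]) -> dict[str, dict]:
    """Merge both sources into one record per customer, keyed by normalized email."""
    merged: dict[str, dict] = {}

    def record_for(key: str) -> dict:
        # Shared target schema: every customer gets the same three fields.
        return merged.setdefault(key, {"email": key, "name": None, "spend": 0.0})

    for row in crm:
        record_for(normalize_email(row["Email"]))["name"] = row["FullName"]
    for row in billing:
        record_for(normalize_email(row["customer_email"]))["spend"] += float(row["total_spend"])
    return merged

customers = consolidate(crm_rows, billing_rows)
for record in customers.values():
    print(record)
```

Customers that appear in only one source are kept with default values (a full outer join), which makes gaps between systems visible instead of silently dropping them.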

A high-functioning data pipeline should support both long-running batch queries and smaller interactive queries that let data scientists explore tables and understand the schema without having to wait minutes or hours when sampling data.
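One way to let analysts understand a table's schema without scanning the whole dataset is to infer column types from a bounded sample. This minimal sketch reads only the first `sample_size` rows with `itertools.islice`, so it returns quickly even on an unbounded input; `infer_schema`, its type rules, and the `huge_table` generator are illustrative assumptions.

```python
from itertools import islice
from typing import Iterable, Iterator

def infer_type(value: str) -> str:
    """Classify a raw string value as int, float, or str."""
    for caster, name in ((int, "int"), (float, "float")):
        try:
            caster(value)
            return name
        except ValueError:
            pass
    return "str"

def infer_schema(rows: Iterable[dict[str, str]], sample_size: int = 1000) -> dict[str, str]:
    """Infer column types from at most sample_size rows, never the full table."""
    rank = {"int": 0, "float": 1, "str": 2}  # widen towards the most general type
    schema: dict[str, str] = {}
    for row in islice(rows, sample_size):
        for column, value in row.items():
            t = infer_type(value)
            if column not in schema or rank[t] > rank[schema[column]]:
                schema[column] = t
    return schema

def huge_table() -> Iterator[dict[str, str]]:
    """Hypothetical unbounded table, generated lazily."""
    i = 0
    while True:
        yield {"id": str(i), "price": f"{i * 0.5:.2f}", "sku": f"SKU-{i}"}
        i += 1

print(infer_schema(huge_table(), sample_size=100))
# {'id': 'int', 'price': 'float', 'sku': 'str'}
```

A prefix sample is fast but can miss values that only appear later in the table; a random sample is more representative at the cost of reading more of the data.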

Using the latest open-source technologies, such as Hadoop, Spark, Flink, and Kafka, we help you build systems that scale. Our architects select the right combination of hardware, storage, and software to design a modern, sustainable architecture. To ensure continuity, we provide all the training your existing engineers need to maintain the systems.

Lambda architecture is a deployment model for data processing that organizations use to combine a traditional batch pipeline with a fast, real-time stream pipeline for data access. It has become a common architecture in IT and development organizations' toolkits as businesses strive to become more data-driven and event-driven in the face of massive volumes of rapidly generated data.
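The batch-plus-stream model (commonly known as the Lambda architecture) can be sketched in a few lines: a batch layer periodically recomputes a complete view from the master dataset, a speed layer incrementally covers events that arrived since the last batch run, and queries merge the two. This is a minimal, single-threaded in-memory sketch assuming page-view counting as the workload; the class and method names are hypothetical. Real deployments would typically use a system such as Spark for the batch layer and Flink, often fed by Kafka, for the stream layer.

```python
from collections import Counter

class LambdaPipeline:
    """Toy Lambda-style pipeline counting page views per URL."""

    def __init__(self) -> None:
        self.master: list[str] = []           # append-only log of raw events
        self.batch_view: Counter = Counter()  # complete but possibly stale
        self.realtime_view: Counter = Counter()  # fresh but covers only recent events

    def ingest(self, url: str) -> None:
        """New events go to the master log and, immediately, to the speed layer."""
        self.master.append(url)
        self.realtime_view[url] += 1

    def run_batch(self) -> None:
        """Batch layer: recompute the view from scratch over the whole log."""
        self.batch_view = Counter(self.master)
        # Every event is now covered by the batch view, so the speed layer resets.
        self.realtime_view = Counter()

    def query(self, url: str) -> int:
        """Serving layer: merge the batch view with the real-time view."""
        return self.batch_view[url] + self.realtime_view[url]

pipeline = LambdaPipeline()
for url in ["/home", "/about", "/home"]:
    pipeline.ingest(url)
pipeline.run_batch()            # batch view: /home=2, /about=1
pipeline.ingest("/home")        # arrives after the batch run
print(pipeline.query("/home"))  # 3 (2 from the batch view + 1 from the speed layer)
```

Recomputing the batch view from the raw log means any error in the speed layer is corrected at the next batch run, which is the main reason to keep both paths.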